Is Your Cloud Account Funding the Next Forever War?

U.S. techno-capitalism has long fused commercial innovation with large-scale systems of military power

From Cold War Control Rooms to the Cloud

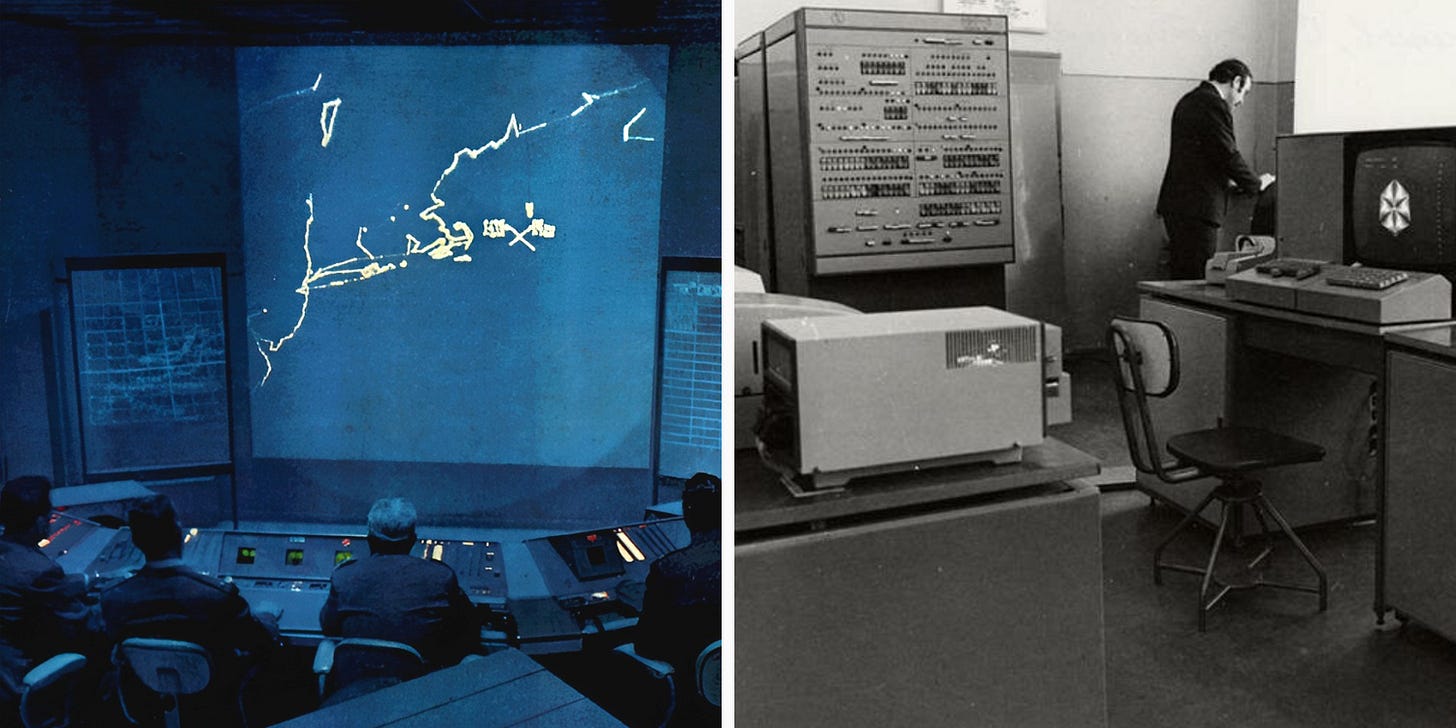

If today’s data centers and satellite communication hubs, such as the NSA’s massive data center complex, embody national security and surveillance, then blinking consoles and sprawling control rooms captured the Cold War imagination of computing. In the United States, that imagination first took form in the Semi-Automatic Ground Environment (SAGE), a 1950s air-defense project that linked dozens of IBM machines into a continent-wide radar and missile coordination grid. Often described as the first truly networked computer system, SAGE radiated centralized command and control from the heart of the military-industrial complex.

Across the Iron Curtain, Soviet planners pursued their own cybernetic dream: the National Automated System for Computation and Information Processing (OGAS). Advocates promised a single automated brain capable of “continuous optimal planning and control” across the entire socialist economy. To American observers, OGAS looked less like a tool of rational coordination than a blueprint for a cybernetic Leviathan. CIA analysts warned of “closed-loop, feedback control” that could allow Moscow to outpace American capitalism; even Arthur Schlesinger Jr., Kennedy’s liberal consigliere, fretted that Soviet machine planning might win that race. One Air Force commander put it in starker terms: such a system could be “imposed upon us from an authoritarian, centralized, cybernated, world-powerful command and control center in Moscow.”[1]

The irony, of course, is that the nightmare Schlesinger feared was not imposed from Moscow at all. It was built in California. Neoliberal America, not Soviet socialism, produced the global digital infrastructure that now governs daily life and state power alike. What we call the “cloud”—a planetary-scale lattice of semiconductors, networks, data centers, and software platforms—is precisely the kind of totalizing system Americans were taught to fear. It coordinates production and consumption, organizes supply chains, captures and processes vast streams of behavioral data, and increasingly integrates advanced artificial intelligence. In short, it is a distributed apparatus of planning and control—but one linked to corporate profit and national security.

Today, there is no command post or blinking console to dramatize power; control has been diffused into global networks and condensed into the devices we carry in our pockets. Yet the Cold War visions of SAGE and OGAS remind us that computation was never just about calculation—it was about command. By the 1980s, that logic had hardened into economic policy. The question was no longer whether technologies might spill across civilian and military lines, but how national survival itself would hinge on securing the commercial base of electronics, chips, and the minerals that made them possible.

Techno-Nationalism and the Material Base

The phrase “dual-use”—popularized in U.S. Cold War policy to describe technologies with both civilian and military applications—suggests a neat division between the two domains. In practice, much of modern industry has always carried war within it. Railroads were designed to move troops as readily as they carried commuters. Fertilizers such as ammonium nitrate and other nitrogen compounds fed crops but also provided the chemical base for explosives and munitions. From the start, industrial capitalism blurred the line between commerce and conflict. The roots of Silicon Valley, and of the broader computer industry, were likewise seeded by military contracting, beginning with Navy funding for radio research around the Spanish-American War. Missiles, telecommunications, and surveillance systems grew from this foundation and were continuously sustained by defense budgets throughout the twentieth century.

By the late twentieth century, the relationship between military and commercial technology shifted but remained deeply institutionalized. Facing ballooning costs, U.S. defense agencies in the 1980s and 1990s began adopting the “commercial off-the-shelf” (COTS) policy, sourcing widely available electronics for weapons systems rather than commissioning bespoke designs. The logic was pragmatic: Silicon Valley was already producing advanced components at scale, so the military could leverage private innovation rather than duplicate it. But the consequences ran deeper. National security now depended not only on traditional defense contractors but also on the stability and competitiveness of the commercial technology sector itself.

This shift collided with a new anxiety. In the 1980s, just as corporate America was hollowing out its own domestic manufacturing base through overseas branch plants and outsourcing, Japanese firms were overtaking their U.S. rivals in semiconductors. Suddenly, what had been treated as consumer goods (memory chips, microprocessors) were recast as lifelines of national industrial survival. A 1984 National Research Council report, The Race for the New Frontier, warned that “the U.S. advanced technology enterprise… must be held as one of the country’s most valued objectives.”[2] The Defense Science Board went further, declaring that U.S. forces “depended heavily on technological superiority in electronics; that semiconductors were the key to such leadership; [and] that this depended on strength in commercial markets.”[3]

This techno-nationalist turn[4] also extended to raw materials, the foundation of any nation’s ‘industrial base.’ Having experienced material shortfalls and restrictions before and during World War II, Cold War planners feared bottlenecks in materials including cobalt, tungsten, and rare earths. Well into the Reagan era, entire programs were devoted to monitoring and stockpiling what were deemed “strategic” minerals.[5] Decades later, these concerns returned with force. A 2017 executive order from the Trump administration set in motion a federal list of 35 “critical minerals” essential to both economic growth and military capacity. The United States Geological Survey later identified 50, while the Pentagon tracked more than 250 “strategic and critical” materials, defined as “those that support military and essential civilian industry” (with a special focus on semiconductor production in Taiwan and Korea), and found many of them concentrated in Chinese supply chains.

Seen this way, dual-use is not just about chips or drones. It is also about the material foundations of modern industry—foundations governments treat as matters of national security. Conventional warfare required enormous inputs of steel, fuel, and minerals. But by the late Cold War, defense strategists began to argue that the decisive raw material was shifting. The future of war, it was claimed, would hinge not only on industrial stockpiles but on the ability to capture, transmit, and process information.

In the 1990s, this logic crystallized in what U.S. defense thinkers called a “Revolution in Military Affairs.” Admiral William Owens predicted that networks of sensors, satellites, and algorithms could dissolve the ‘fog of war’ altogether, making victory flow not from sheer firepower but from information itself.[6] As asymmetric warfare and counterinsurgency replaced conventional battlefields, the raw material of intelligence became data, and the infrastructures for harvesting and analyzing it were increasingly concentrated in Silicon Valley.

Silicon Valley Goes to War (Again)

The techno-nationalist anxieties of the 1980s thus found their proving ground in the 2000s. The dot-com crash had left Silicon Valley adrift—trillions in market value evaporated, startups shuttered, and engineers scattered. Then came 9/11. Federal budgets for intelligence, surveillance, and homeland security exploded, with the Department of Homeland Security alone requesting more than $40 billion by 2005. For firms desperate for cash flow, empire offered a new business model.

Commentators Mark Mills and Peter Huber captured the moment bluntly in City Journal, celebrating the “trillion-dollar infrastructure of semiconductor and software industries, with deep roots as defense contractors.” To them, dual-use surveillance was the embodiment of American exceptionalism. At home, these “technologies of freedom” would guarantee security and liberty; abroad, they would “destroy privacy everywhere we need to destroy it.” Their conclusion was striking in its candor: “it will end up as their sons against our silicon. Our silicon will win.” U.S. empire now marched openly under the banner of Moore’s Law.

The War on Terror didn’t rescue Silicon Valley wholesale—Google’s advertising engine and Apple’s iPhone did that—but it gave a crucial subset of firms and engineers a steady lifeline. In 1999, the CIA created In-Q-Tel, a venture arm designed to channel private innovation into the intelligence community. One of its first investments was the geospatial startup Keyhole, whose EarthViewer platform was later deployed in Iraq and ultimately acquired by Google to become Google Earth. In 2003, Palantir emerged directly from counterterrorism demand, with U.S. intelligence agencies funding and piloting its data-fusion platforms before they became indispensable in Iraq and Afghanistan and later in commercial markets. A year later, DARPA’s Grand Challenge poured federal resources into autonomous vehicle experiments, cultivating teams and technologies that would ultimately seed Google’s self-driving program and Uber’s foray into automation. Together, these initiatives provided legitimacy, stability, and cash during the lean years between the dot-com bust and the rise of Web 2.0 consumer platforms, anchoring a defense-driven pipeline of talent and technologies that would later spill into the commercial mainstream.

What began as stopgap lifelines in the wake of the dot-com crash hardened into structural dependence, and by the 2010s these relationships had been institutionalized. The Defense Innovation Unit, headquartered in Mountain View, funneled billions into startups working on drones, AI, and cyber defense. Congress codified the fusion with the 2018 CLOUD Act, which compels U.S. cloud providers to turn over data in response to U.S. legal process even when that data is stored abroad. In 2022, the Pentagon awarded the $9 billion Joint Warfighting Cloud Capability contract to Google, Amazon, Microsoft, and Oracle, effectively deputizing them as military infrastructure. Eric Schmidt, Google’s former CEO, captured the reality with unusual candor: “Because of the way the system works, I am now a computer scientist, businessman, and an arms dealer.”[7]

The Chip Wars

If the militarization of the cloud revealed how digital networks were woven into the architecture of war, semiconductors show the same condition at a deeper level. Chips are not merely components of everyday devices; they are the substrate of twenty-first-century power. Every missile system, drone swarm, and AI model is only as capable as the processors inside it. Silicon Valley had already ceased to be a civilian sector occasionally tapped for defense. By the 2010s, it had become a critical extension of the national security state—and semiconductors stood at the center of this fusion.

First, indispensability. Chips underpin every frontier of military technology. The CHIPS Act of 2021 and the CHIPS and Science Act of 2022 together committed more than $50 billion to domestic capacity, with Intel receiving billions specifically for “secure enclave” chips designed for Pentagon use—an example of how industrial subsidies also function as defense procurement. This logic is also behind the U.S. government’s recent $8.9 billion stake in Intel to support its floundering foundry program. By contrast, Taiwan’s TSMC has long dominated the foundry model that underwrites much of the U.S. military-industrial complex. Yet Taipei remains cautious about overcommitting TSMC to defense production, wary of eroding its “silicon shield”—the geopolitical leverage it derives from being indispensable to global chip supply.

Second, concentration. For decades, U.S. dominance rested on a hybrid model: corporate leadership at home (Intel, Qualcomm, Micron) paired with offshore fabrication in Japan, South Korea, and above all Taiwan. This distributed supply chain maximized efficiency but created acute vulnerabilities. China’s Made in China 2025 program underscored the fragility, as Beijing poured subsidies into fabs and design houses to close the technological gap. Although China’s push has been uneven, the prospect of it catching up in advanced nodes set off alarms in Washington, where the lesson of the War on Terror was simply that national security could not be disentangled from corporate innovation.

Third, alliances. The U.S. response to Chinese advances (and to COVID-19 bottlenecks) was nothing short of mobilization, and allies seeking to secure their own industrial bases were pulled into its orbit. Japan lured TSMC to Kumamoto with billions in subsidies; South Korea rolled out tax relief for Samsung and SK Hynix; Europe launched its own Chips Act; and India pledged $10 billion to jump-start domestic production. Some of these partners also coalesced into the “Chip 4 Alliance,” a geopolitical bloc aimed at fencing off China from the commanding heights of semiconductor production.

Arizona brings all three dynamics together. TSMC’s $165 billion Phoenix megafab is not just about supply-chain “resilience.” NVIDIA’s GPUs, built on TSMC manufacturing processes, are increasingly embedded in military AI workloads, from Navy procurement of H100 accelerators to autonomous systems developed by defense startups like Anduril. Xilinx’s FPGAs, long fabricated by TSMC, show up in satellites and radios used by Raytheon and Northrop Grumman. Geographically, Arizona sits between the bomb-making complexes of New Mexico and the aerospace hubs of Southern California. Its desert is becoming a new frontier for the fusion of commercial electronics and military power.

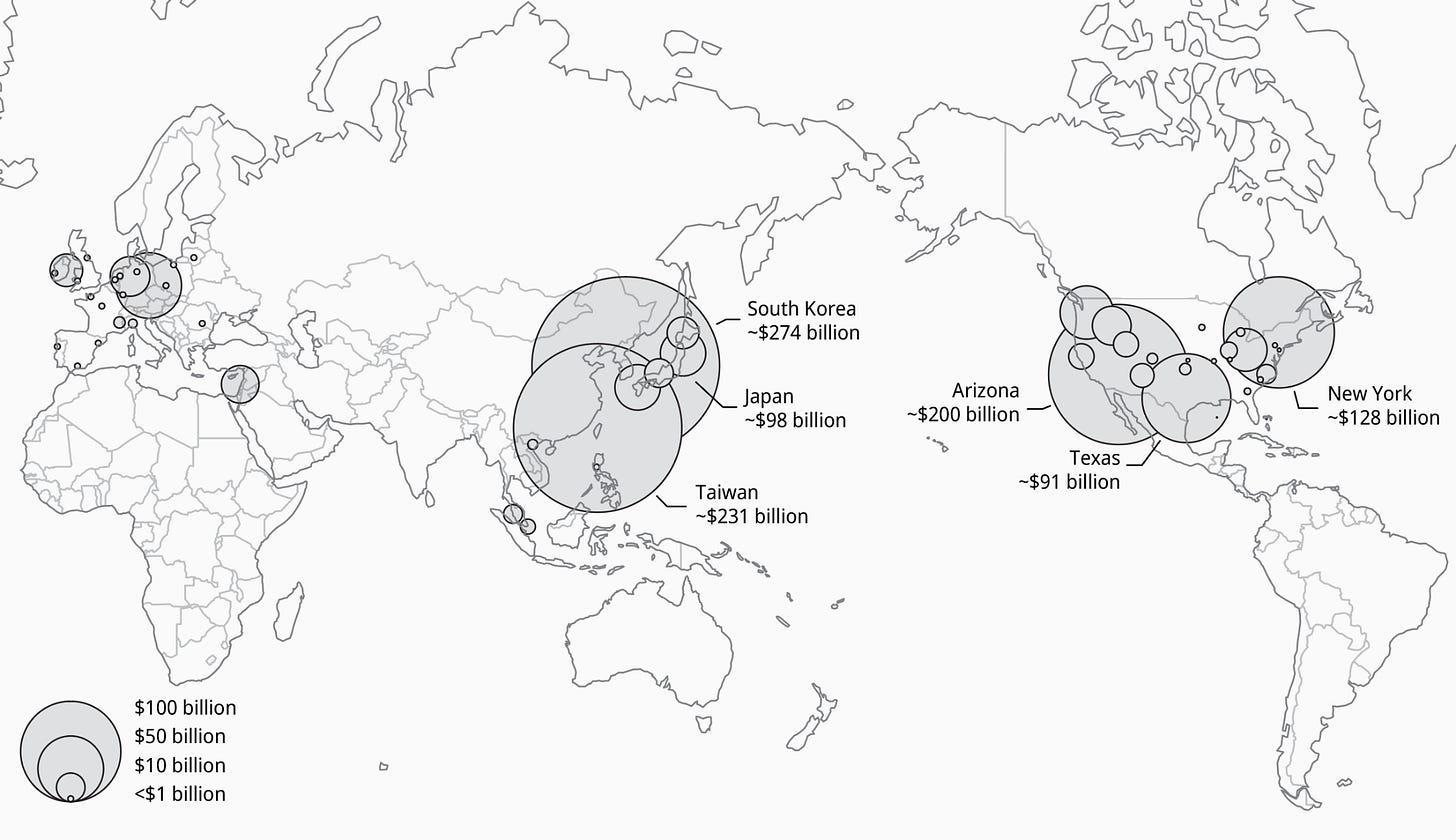

Between 2021 and 2025, announced semiconductor investments exceeded $630 billion in the U.S. alone. The global scale of investment is also staggering. Samsung’s new Pyeongtaek campus nears $100 billion, second only to TSMC’s campus in Arizona. Taiwan also continues to invest heavily, with TSMC planning $100 billion in new projects. These are not just factories; they are massive territorial projects reshaping entire regions, from the deserts of Arizona to the Korean peninsula.

Map of current and announced semiconductor investments 2021 to 2025

Data sources: SEMI 2023, GMFUS 2023, SIA 2025

The Condition of Modern Techno-Statecraft

Charles Wilson, president of General Motors and later Secretary of Defense, captured mid-century corporate nationalism in a remark popularly rendered as “What’s good for General Motors is good for America.” Decades later, amid intensifying U.S.–China tensions, the same logic resurfaced in a new register when private equity founder and current U.S. ambassador Thomas Barrack declared in 2017: “What’s good for America is good for the world.” Both statements reflect a persistent conflation of corporate power, national interest, and global order. As Tony Smith observed, the dominance of American techno-capital has produced a global market structure in which “a handful of giant First-World oligopolies operating at or near the frontier of scientific-technical knowledge” dictate terms to “small-scale Third-World producers far from that frontier.”[8]

Seen in this light, the empire Silicon Valley has built makes the Cold War imagination of Soviet planning appear almost quaint. Semiconductors—and the cloud infrastructure they underpin—are no longer just another industry; they form the architecture of twenty-first-century power. Control over chip supply chains now equates to control over commerce, surveillance, and war alike. What began as ad-hoc procurement strategies and techno-nationalist subsidies has since hardened into a regime of authoritarian techno-statecraft, where defense budgets, corporate R&D, and industrial policy converge into one seamless system of power projection.

Lewis Mumford foresaw this trajectory in 1967 when he described the rise of “authoritarian megatechnics,” warning that a “dominant minority will create a uniform, all-enveloping, super-planetary structure, designed for automatic operation…” that would subordinate individuals to vast systems of control.[9] That warning no longer reads as dystopian speculation but as a description of our present. The cloud that stores family photos is the same one that processes drone surveillance. The chips powering video games also drive missile guidance. Convenience and coercion now run on the same circuits.

The war in Gaza shows these dynamics with brutal clarity. Google and Amazon’s $1.2 billion Project Nimbus contract arms Israel’s Ministry of Defense with cloud and AI capacity, while Microsoft, IBM, HP, and Palantir provide the infrastructure for military data, surveillance, and population control. Worker resistance has flared—Google employees forced the company to back away from Project Maven, and Amazon and Google staff protested Nimbus—but these efforts have barely dented the machinery of militarized computing.

And the fusion is not limited to foreign battlefields. The very tools of empire are increasingly turned inward. Palantir’s $30 million ImmigrationOS contract with ICE is designed to consolidate sensitive federal data to streamline deportations, raising alarms about civil liberty violations and mass surveillance. Lawmakers have also pressed Palantir over its role in building a government-wide “mega-database” linking IRS and agency records—“a surveillance nightmare” in the words of its critics. Meanwhile, Google, Amazon, and Microsoft quietly supply cloud services to ICE and CBP, facilitating border surveillance even as their own employees protest.

As consumer and enterprise markets plateau, Big Tech’s profitability hinges on public subsidies, defense contracts, and a steady pipeline of militarized demand. The new industrial strategies around AI and semiconductors make this dependence explicit—fast-tracking energy- and land-intensive buildouts, channeling public funds into cloud and chip monopolies, and using export controls and subsidies to project U.S. corporate power abroad. This dependence tightens the knot between corporations and the state, ensuring that the military-industrial complex is not just a customer but a guarantor of profitability. And the deeper this entanglement grows, the harder it becomes to imagine a technological future driven by public needs rather than by the imperatives of empire.

References

1. Gerovitch 2010, cited in Vincent Mosco, To the Cloud: Big Data in a Turbulent World (Routledge, 2015).

2. National Research Council, The Race for the New Frontier: International Competition in Advanced Technology (New York: Simon & Schuster, 1984): 6.

3. National Research Council, U.S.-Japan Strategic Alliances in the Semiconductor Industry: Technology Transfer, Competition, and Public Policy (National Academies Press, 1992): 85.

4. OECD, Strategic Industries in a Global Economy: Policy Issues for the 1990s (OECD, 1991).

5. See the reports of the President’s Materials Policy (Paley) Commission: Materials Policy Commission, Resources for Freedom, Volume 1: Foundations for Growth and Security (1952). In addition to recommendations for foreign and industrial policy, the final reports also advocate continued public investment in technology, arguing that “the strongest and most versatile single resource in the fight against scarcities of materials is technology” (132).

6. William A. Owens, “System of Systems,” Armed Forces Journal, January 1996: 47. On Owens’ views on the RMA, see William A. Owens, High Seas: The Naval Passage to an Uncharted World (Naval Institute Press, 1995). Owens went on to serve as president, COO, and vice chairman of the defense contractor Science Applications International Corporation (now Leidos), as well as in various positions at other telecommunications and technology companies.

7. Eric Schmidt, “The Age of AI,” talk presented at the Stanford ECON295/CS323 course, August 15, 2024.

8. Tony Smith, Globalisation: A Systematic Marxian Account (Brill, 2005): 173.

9. Lewis Mumford, The Myth of the Machine: Technics and Human Development (Harcourt, Brace & World, 1967): 3.