Who Profits From the AI Boom? Following the Money in the Cloud Economy

Tracing how debt, utilities, land, and chips shape the uneven flow of profits across the AI stack

Where does the money really accrue in the AI buildout, and who ends up carrying the risk? Questions like these keep coming up in conversations with students and colleagues about the political economy of the AI bubble. So let’s try to map how value flows through the AI ecosystem and follow the money in the cloud economy.

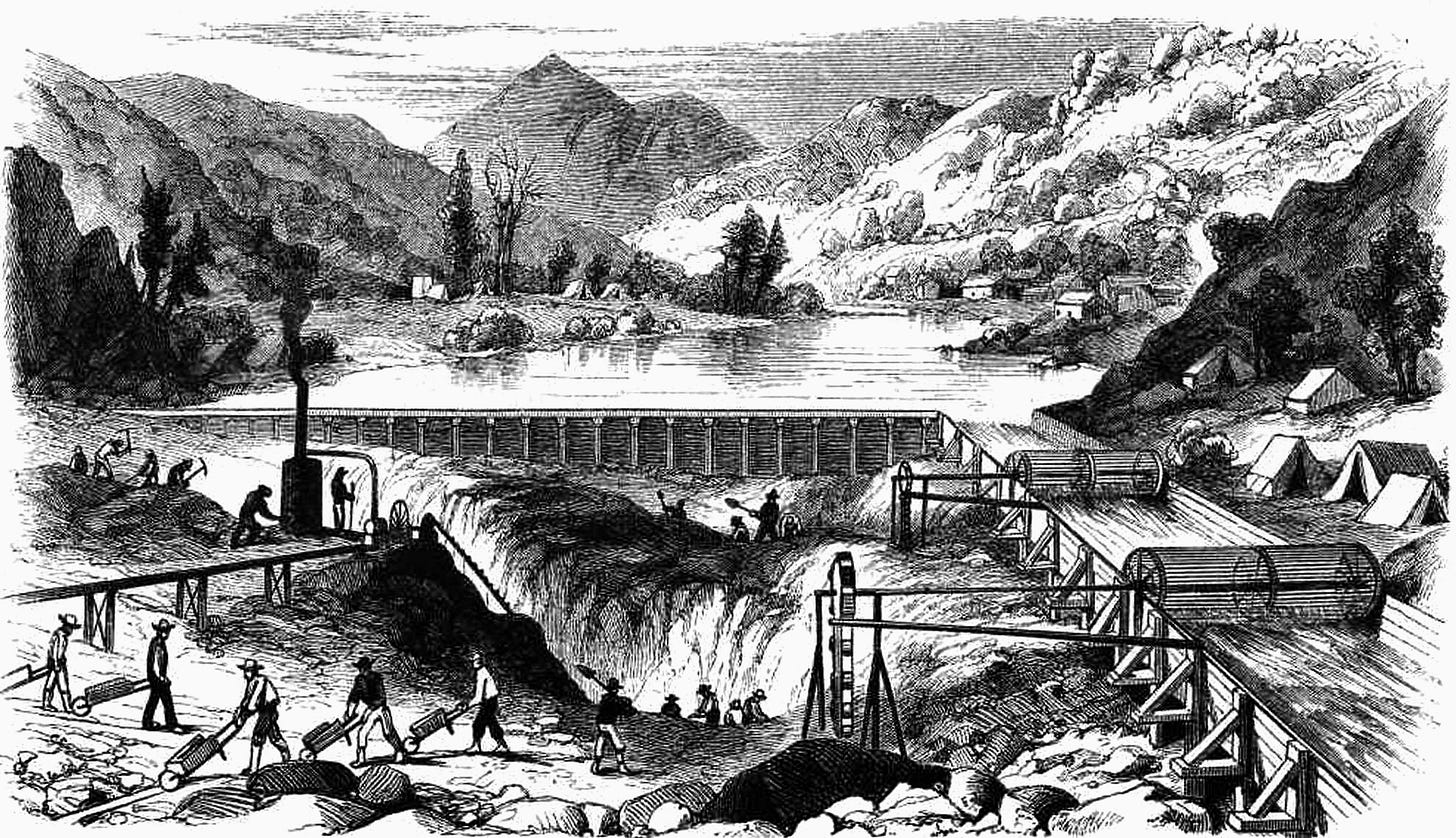

During the California Gold Rush of the mid-19th century, tens of thousands of prospectors streamed west in hopes of striking it rich. Most miners never found fortunes and many barely covered their costs. Some struck it rich, but it was the merchants who sold them supplies—picks, shovels, boots, food, and boarding house beds—who earned steady profits. Equipment manufacturers, saloon owners, transport providers, and land speculators often captured more value than the miners themselves. Even financiers entered the scene, extending credit and taking stakes in mining operations, profiting when mines produced and losing heavily when they did not. The Gold Rush is remembered as much for this supporting economy as for the glitter of the metal itself.

The Gold Rush is a useful shorthand for today’s AI boom. Like many observers, I use it to spotlight the key economic actors in a boom-bust system. If AI labs and startups are the prospectors—highly visible yet often unprofitable, burning cash and tied to long-term obligations—then the hyperscale clouds are the merchants, selling compute, storage, and services no matter which lab wins. Utilities and real estate investors, meanwhile, extract rents for scarce power and strategically located parcels. Profits climb further upstream to the firms that control the critical bottlenecks—Nvidia and TSMC, the shovel makers of the moment, capturing extraordinary margins in chip design and fabrication. And just as nineteenth-century financiers magnified boom-and-bust cycles, debt markets today fuel GPU purchases and speculative buildouts.

The rise of AI has led to trillion-dollar valuations and promises of revolutions in work, education, medicine, and security. Yet beneath the headlines lies an economy where value is captured unevenly—by those who provide the tools, platforms, and territory, rather than by the firms building the applications themselves (at least so far). To map this distribution, I start downstream with the model labs and move up the stack—through hyperscale clouds, utilities and landlords, to chip designers and foundries—with finance capital underwriting and shaping the whole system.

The miners burn cash while the merchants, manufacturers, and financiers cash in.

AI labs and independent clouds in the red

The most visible companies in the AI boom are often the least profitable. OpenAI, Anthropic, Cohere, and similar labs dominate headlines with their conversational models and enterprise deals. Yet their financial statements tell a more complicated story.

OpenAI, for example, is estimated to be generating revenue at an annualized pace of around $12–13 billion in 2025, yet analysts project it will also burn roughly $8 billion in cash over the same period. Anthropic faces a similar imbalance, with multi-billion-dollar annualized revenue but heavy operating losses. The gap reflects the staggering cost of compute. Training a new-generation model can require investments in the hundreds of millions of dollars, while day-to-day inference demands continuous access to fleets of high-end GPUs housed in specialized data centers.

To secure this capacity, AI labs sign multi-year cloud contracts. OpenAI’s widely reported $300 billion agreement with Oracle is the most dramatic example. Economically, these contracts resemble debt—they are binding obligations to pay for compute whether or not the revenue materializes. Call it “cloud debt.” Like financial leverage, it magnifies returns if demand grows, but it can also sink firms if revenues lag behind.
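To see why a fixed compute commitment behaves like leverage, here is a minimal sketch. Every number in it is hypothetical and chosen only for illustration; none comes from any company's filings, and the function name `operating_result` is my own. It holds the contracted compute bill constant and varies revenue.

```python
# Hypothetical illustration of "cloud debt": a fixed compute commitment
# behaves like leverage. All numbers are invented for the example.

def operating_result(revenue: float, committed_compute: float, other_costs: float) -> float:
    """Profit (or loss) when compute spend is fixed by contract."""
    return revenue - committed_compute - other_costs


COMMITTED_COMPUTE = 10.0  # $bn per year owed regardless of demand (assumed)
OTHER_COSTS = 3.0         # $bn per year for staff, sales, etc. (assumed)

# Three assumed revenue outcomes, in $bn per year.
scenarios = {"demand lags": 9.0, "base case": 13.0, "demand booms": 17.0}

for name, revenue in scenarios.items():
    result = operating_result(revenue, COMMITTED_COMPUTE, OTHER_COSTS)
    print(f"{name:>12}: revenue ${revenue:.1f}bn -> result ${result:+.1f}bn")
```

In this toy setup, a swing of roughly 30% in revenue around the base case moves the result from a $4 billion loss to a $4 billion profit, because the contracted cost does not flex with demand.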

The Financial Times recently reported that an $11 billion debt market has emerged around GPUs themselves. Independent providers borrow against Nvidia chips, using them as collateral for loans. CoreWeave, a fast-growing GPU cloud, has raised more than $7.5 billion in debt financing to fund expansion. In one recent quarter, it posted revenues over a billion dollars yet still recorded a net loss of nearly $300 million, mostly due to interest expenses. Debt enables rapid growth but also concentrates risk in firms whose assets and revenues are tightly tied to volatile AI demand.

In short, downstream labs and independent clouds carry the heaviest financial risk. They finance the system through multi-year contracts and leveraged debt, but the revenues they may generate leak quickly upstream.

Hyperscale clouds as intermediaries and strategists

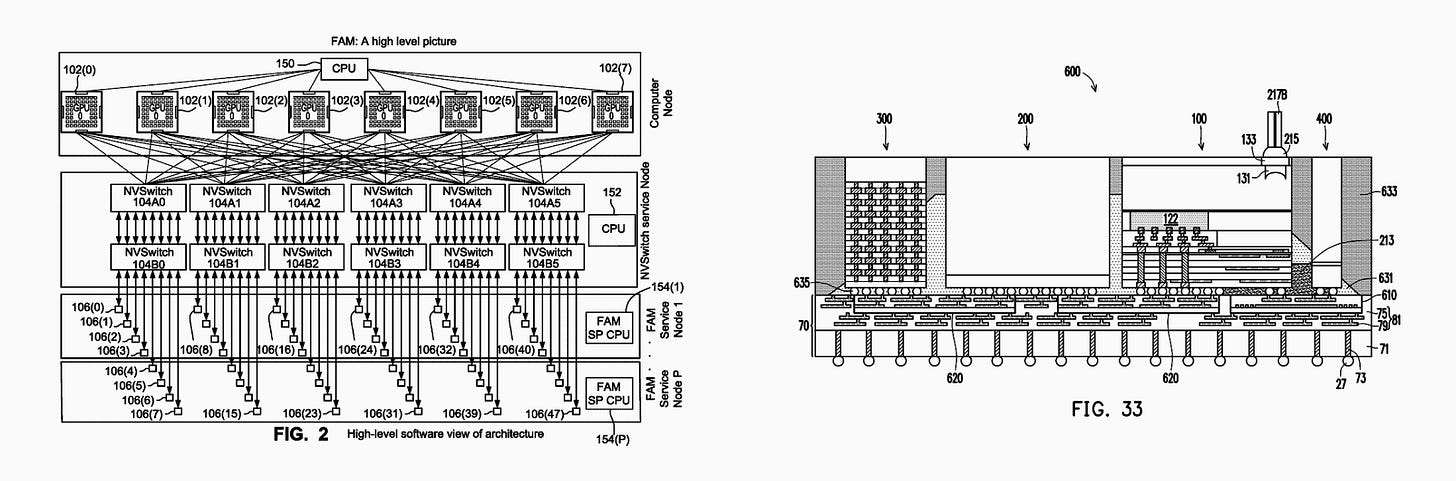

Above the labs sit the hyperscale cloud providers—Amazon Web Services, Microsoft Azure, and Google Cloud. These companies purchase chips from Nvidia and TSMC, acquire land, secure electricity, and operate vast data centers. They then rent out “compute” to enterprises, startups, and AI labs through a mix of on-demand access, reserved instances, and managed AI services.

Financially, the clouds are on far firmer ground than their downstream customers. In its most recent quarter, AWS posted over $10 billion in operating income. Google Cloud reported nearly $3 billion, at margins above 20%. Microsoft said its cloud division’s gross margin was nearly 70%, although it noted a decline due to the heavy capital expenditures needed for AI infrastructure.

These firms are investing at a historic pace. Alphabet has guided $85 billion in capital expenditures for 2025, while Microsoft and Amazon are on similar trajectories. These budgets cover not only chips but also land, grid connections, fiber backbones, cooling systems, and transmission upgrades. Their profitability depends less on scarcity than on utilization. If customers keep servers busy around the clock, the economics work; if demand softens, depreciation and energy costs can quickly erode margins.
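The utilization point can be made concrete with a rough sketch. The rental price, hardware cost, depreciation schedule, and yearly operating cost below are all assumptions picked for illustration, not reported figures, and energy is simplified into a fixed yearly cost. The function `annual_margin` is hypothetical.

```python
# Hypothetical sketch: how utilization drives the margin on a rented GPU.
# Every number here is an assumption chosen for illustration.

HOURS_PER_YEAR = 24 * 365


def annual_margin(utilization: float,
                  price_per_hour: float = 2.50,     # assumed rental price, $/GPU-hour
                  capex_per_gpu: float = 40_000.0,  # assumed all-in cost per GPU, $
                  useful_life_years: float = 5.0,   # assumed depreciation schedule
                  fixed_opex: float = 3_000.0       # assumed yearly power/cooling/space, $
                  ) -> float:
    """Return operating margin (as a fraction of revenue) for one GPU-year."""
    revenue = utilization * HOURS_PER_YEAR * price_per_hour
    depreciation = capex_per_gpu / useful_life_years  # straight-line
    costs = depreciation + fixed_opex                 # does not shrink when demand does
    return (revenue - costs) / revenue


for u in (0.9, 0.7, 0.5, 0.4):
    print(f"utilization {u:.0%}: margin {annual_margin(u):+.0%}")
```

Under these made-up inputs the break-even sits near 50% utilization: the same hardware that earns a healthy margin when busy loses money when it idles, since depreciation accrues either way.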

Yet clouds are not simply passive intermediaries. As SemiAnalysis has noted in its study of Oracle’s strategy, cloud providers actively shape their economics through site selection, data center design, and network efficiencies. Locating a facility near cheap power and abundant land can cut total cost of ownership dramatically. Optimizing internal networking can reduce the price of training large models by hundreds of millions of dollars. These strategic choices enable cloud providers to capture more value than their downstream tenants, who face fixed prices for capacity.

UBS analysts claim that “profitability is largely decided by performance.” The clouds that can offer the fastest, most reliable AI infrastructure command premium pricing, because enterprises value latency and throughput. This further entrenches the position of hyperscalers, who have the scale and capital to deliver best-in-class performance. Smaller players and AI labs either aren’t equipped in the first place or struggle to match these sorts of advantages.

Utilities and real estate extract resource rents

As I’ve written extensively, the limits to scaling AI extend beyond silicon to include power, cooling, and water. Each data center is essentially a warehouse of GPUs that must operate continuously under heavy energy and thermal stress.

Utilities are critical in the geography of AI growth. Hyperscale firms contract for power at the scale of hundreds of megawatts, while in Northern Virginia—home to the world’s largest data center cluster—Dominion Energy is committing tens of billions of dollars to new substations and transmission lines to keep pace with demand. In Virginia, regulators have even required that transmission upgrades be completed before new loads may be connected. Similar dynamics are evident in Ohio, where AEP forecasts data center demand rising from ~600 MW in 2024 to nearly 5 GW by 2030, leading regulators to adopt new interconnection tariffs and billing rules. Because utility capital expenditures are typically recovered through regulated rates, part of the cost of private data center and AI expansion may be effectively socialized onto local ratepayers.

Real estate is increasingly strategic in the expansion of AI infrastructure. Parcels with direct access to high-capacity transmission lines, substations, and fiber backbones are in short supply, driving up competition and valuations. Developers and investors highlight proximity to substations and transmission corridors as key factors that lower construction costs and accelerate deployment. Large land acquisitions—such as recent campus-scale purchases in Ohio—are marketed as “rare and valuable” precisely because of these locational advantages.

Specialized data center real estate investment trusts (REITs) have become consistent beneficiaries of this trend, capturing higher lease rates in power- and land-constrained markets. Large players like Digital Realty and Equinix reported Q2 2025 net incomes of $1.05 billion and $368 million, respectively, though Digital Realty’s figure was lifted by one-time gains rather than recurring operations. Their economics hinge less on software breakthroughs and more on geography and infrastructure: control of scarce, grid-adjacent sites with fiber, cooling, and room to expand. They monetize AI demand through long-term leases even as tenants shoulder most of the model and product risk. Hyperscalers may also lease large blocks from data center REITs, shifting some development, financing, and delivery risk to the landlord.

While utilities and REITs may not command Nvidia-level margins, they capture value by controlling essential inputs like resources and land. They shape where the AI economy grows, how quickly new capacity comes online, and who pays for the supporting infrastructure.

To the chipmakers go the surplus

At the very top of the stack sit Nvidia and TSMC and similarly situated firms. Together they represent the most striking case of value capture in the AI economy.

Nvidia designs the graphics chips (GPUs) that train and run today’s AI models. In its most recent blowout quarter (fiscal Q2 2026, which ended in July 2025), Nvidia reported $46.7 billion in revenue, with $41.1 billion coming from data-center sales alone. Its profit margins are unusually high for a hardware maker, more like what you’d expect from a software company. While its chips are both highly capable and in short supply, its success is due in large part to its monopoly over the CUDA software framework, which is now the default for machine learning and effectively locks developers and organizations into its ecosystem.

TSMC is the contract manufacturer that actually builds the vast majority of advanced AI chips. Its results show how valuable that role is. In Q2 2025, TSMC reported $30.07 billion in revenue with a 58.6% gross margin and a 49.6% operating margin. Most of its sales came from leading-edge processes, over which it has a virtual monopoly, allowing it to charge premium prices.

The bottom line is that semiconductor revenues and profits vastly outstrip those of AI applications. While labs generate billions in revenue, Nvidia and TSMC generate tens of billions in profit. The imbalance confirms that the bottleneck at the top of the stack is where the surplus flows.
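A back-of-the-envelope check of that imbalance, using only figures quoted in this article. The operating income here is implied by multiplying reported revenue by the reported operating margin, and the periods differ (annualized estimates for OpenAI, single quarters for the chipmakers), so treat it as a rough sense of scale rather than a like-for-like comparison.

```python
# Back-of-the-envelope comparison using only figures quoted in this article.
# Periods differ (annualized vs. quarterly), so this shows rough scale only.

nvidia_revenue = 46.7          # $bn, one quarter
nvidia_data_center = 41.1      # $bn, data-center portion of that quarter
tsmc_revenue = 30.07           # $bn, Q2 2025
tsmc_operating_margin = 0.496  # 49.6%
openai_annualized_revenue = (12.0, 13.0)  # $bn, estimated 2025 pace (low, high)

# Share of Nvidia's quarterly revenue that came from data centers.
data_center_share = nvidia_data_center / nvidia_revenue

# Implied operating income = revenue x operating margin (an approximation).
tsmc_operating_income = tsmc_revenue * tsmc_operating_margin

# Spread OpenAI's annualized pace evenly over four quarters.
openai_quarterly = tuple(r / 4 for r in openai_annualized_revenue)

print(f"Nvidia data-center share of revenue: {data_center_share:.0%}")
print(f"TSMC implied quarterly operating income: ${tsmc_operating_income:.1f}bn")
print(f"OpenAI implied quarterly revenue: "
      f"${openai_quarterly[0]:.2f}-{openai_quarterly[1]:.2f}bn")
```

On these figures, TSMC's implied operating income for a single quarter (about $14.9 billion) exceeds OpenAI's estimated revenue pace for the full year.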

Private equity and asset managers finance the build-out

Private equity and large asset managers are central to this build-out, supplying development equity, project finance, and private credit in exchange for fees, interest, and upside tied to long leases and power contracts. On the credit side, the GPU-backed lending structures and headline facilities noted above are illustrative. The $7.5 billion debt financing behind CoreWeave’s expansion was led by Blackstone and Magnetar. These investors effectively convert future compute and lease commitments into cash today, speeding construction while shifting utilization and operating risk onto the independent clouds and tenants that must keep the hardware busy.

On the equity and development side, global asset managers are also co-funding campus-scale data centers through joint ventures with REITs and operators. Equinix has struck multi-billion-dollar joint ventures (over $15 billion) with sovereign and pension capital, GIC and CPP Investments among them, to expand its xScale hyperscale platform in the U.S., while also creating project-specific joint ventures such as a $600 million partnership with PGIM Real Estate in Silicon Valley. Meanwhile, large buyout firms are tying power and data-center supply together. Blackstone reports more than $70 billion of data-center assets and another $100 billion in its pipeline, and has publicly outlined plans to invest $25 billion in Pennsylvania across data centers and generation to meet AI loads.

Sovereign and public investors in Asia are also channeling capital into this stack. Temasek joined the AI Infrastructure Partnership alongside Microsoft, BlackRock, and MGX, which targets $30 billion+ initially and aims to mobilize up to $100 billion for AI-driven data centers and energy systems—an explicit example of sovereign-backed capital buttressing GPU-centric build-outs. The net effect is that private equity and asset managers convert future compute and lease commitments into cash today, accelerating construction while shifting performance and utilization risk onto operators and tenants, and locking in upstream revenues for chipmakers and power/land owners.

The AI value chain funnels surplus upward, but each layer has a distinct role.

We can certainly widen the scope of actors to include communities, politicians, business coalitions, upstream energy firms, and more. But for now, let’s set those wider entanglements aside and simply recap the map so far:

AI labs and indie GPU clouds (downstream): These are the teams building and running AI products. They often grow revenue fast but spend even faster on computing time and equipment. Because they sign multi-year contracts for capacity, they can end up owing a lot even if usage or sales fall short.

Hyperscale clouds (midstream): Big platforms bundle chips, power, networks, and software and rent them to everyone else. They’re profitable at scale, but only if their giant data centers stay busy. Their main worries are overbuilding, power costs, and slower-than-expected demand.

Utilities and data center REITs (physical enablers): Power companies and data-center landlords control the basics—electricity, grid connections, cooling, fiber routes, and suitable land. They earn steady returns through regulated rates or long leases, and they’re less exposed to whether an AI app succeeds. Their risks are political and permitting delays, not product cycles.

Chip designers and foundries (upstream): The firms that design and manufacture the most advanced chips sit at a bottleneck. Because their products are scarce and essential, they capture the richest profits. If AMD or custom silicon designs from hyperscalers gain traction, Nvidia’s margins could compress. Similarly, if new foundries emerge or geopolitical tensions disrupt Taiwan, TSMC’s pricing power could change.

Private equity, asset managers, and sovereign funds (the capital layer): These investors finance the build-out across all the layers. They turn future rents and power contracts into cash today through joint ventures, project finance, and loans (including ones backed by GPU hardware). They collect fees and interest while most day-to-day operating risk stays with the companies using the assets.

You’ll notice I’ve set aside end-user demand (the usual focus of AI “use case” reporting). That’s because, right now, speculative capital—especially venture capital—underwrites the buildout on the strength of its own demand imaginaries. In this frame, AI labs—the “miners” in the analogy—function as the effective customers, lured by dreams of striking it rich. The main point is that most of the profit ends up where scarcity and long contracts live—chips, power, and prime data-center sites. The capital layer speeds everything up by supplying money, but it also spreads risk downward to the operators that must keep the machines full and the bills paid.

The current AI boom is a rent-seeker’s paradise.

The Gold Rush remade not only fortunes but also landscapes and communities. Indigenous nations faced catastrophic demographic collapse and forced labor regimes; Mexican communities saw land and political standing eroded through lawfare, taxation, and violence. In both cases, scarcity rents from gold (and later land and water) accrued upward, while risk and dispossession were pushed onto subordinated peoples. Mining devastated rivers, forests, and soils, while boom towns sprang up and collapsed with the busts. Many migrants and miners left with little to show but debts, while a handful of merchants, landlords, and financiers accumulated enduring wealth.

What happens after the rush is just as important. The flood of bullion swelled the money supply, loosened credit, and subtly re-priced trade and labor across the Atlantic world, effects that outlasted the rush itself. The AI boom may not scar mountainsides or poison streams (for the most part), but it carries its own forms of extraction (of electricity, land, and labor) that are similarly repricing inputs, rewiring infrastructure, and shifting bargaining power among regions, firms, and labor classes in ways that will last long after the speculative period has passed.

In sum, the AI boom is a layered economy in which profits and risks are distributed unevenly. Money flows into flashy applications and speculative bets, but much of it ends up as revenue for hyperscalers, power providers, and chipmakers. The sustainability of this structure depends on whether AI adoption grows fast enough to justify the “cloud debt” being accumulated today. If it does, downstream firms may eventually capture more value. If it does not, many of them will bear the cost of an infrastructure build-out that enriched their suppliers more than themselves.

AI labs (and venture capitalists), of course, price failure into the model; power-law returns mean a few wins can cover many losses. But that doesn’t mean they absorb all the downside.

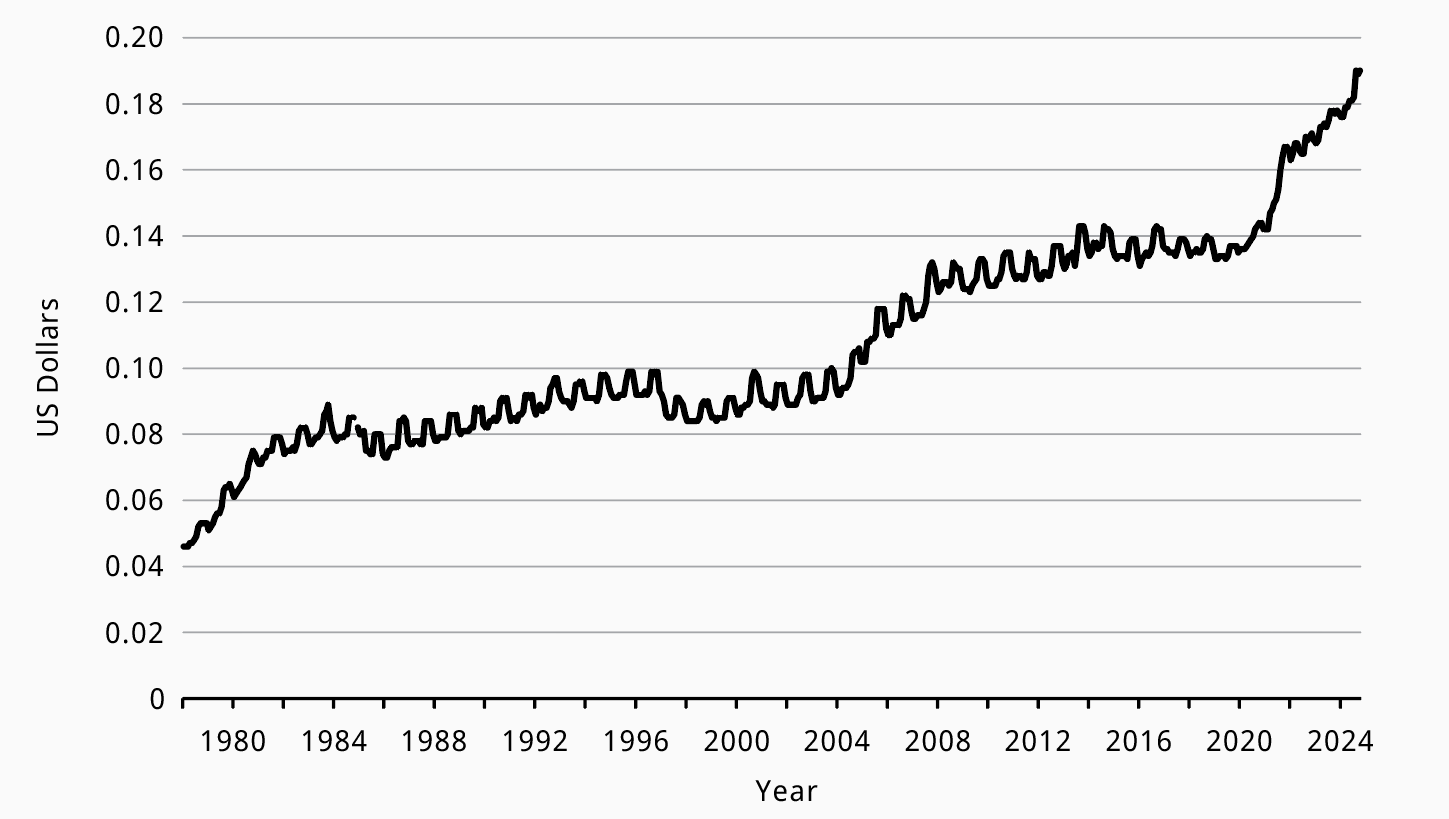

The most consequential outcomes won’t show up in valuation charts so much as in rate cases, power contracts, and water rights, where costs are socialized and baked into everyday life. The public is one important site of value extraction and transfer: communities are already bearing the costs as value flows up the chain. Mainstream outlets are circulating charts like the one shown here on rising energy prices, with data center buildouts cited as a substantial driver.
